Concept: A Dialogue Between Two Eras

This project began years ago as a pure, optimistic celebration of "Diversity." The vision was simple yet ambitious: to place artists of all genders, nationalities, and backgrounds in the absolute center of a 1x1 canvas, connecting them through a seamless match-cut animation. At that time, the future of art felt bright, inclusive, and purely human-driven.

Fast forward to 2025, and the landscape has shifted. The rise of Generative AI has fundamentally reshaped our industry, adding layers of complexity, and arguably irony, to the original concept of "human diversity."

Rather than letting these illustrations gather dust as relics of a past mindset, I chose to use them as a testing ground for the very technology that is disrupting our field. I wanted to see if I could use AI not to replace the artist, but to finish what I started. This piece is no longer just about cultural diversity; it is an experiment in technological integration, exploring how a designer's original intent can survive and evolve in the age of algorithms.

R&D: The Quest for the Perfect Interpolation

To achieve the "Match Cut" effect, I needed an AI model that could respect my original start and end keyframes without hallucinating unwanted details. I conducted a rigorous stress test across the leading Image-to-Video models. Here is my breakdown of how they handle stylized illustration:

Kling AI (可灵): Currently the most balanced performer for character consistency. It excelled at understanding the physical logic of the movement between my keyframes, though it occasionally struggled with the highly stylized textures of my illustrations.

Runway Gen-3 Alpha: Produces the most "cinematic" motion with high contrast, but it tends to be too creative. It often ignored my end-frame constraints in favor of a more dramatic, but inaccurate, morph. Great for vibes, tricky for precision.

Luma Dream Machine: Strong physics simulation, but for 2D illustrations, it sometimes interpreted the flat colors as 3D geometry, causing characters to "melt" or distort unpleasantly during the transition.

Hailuo AI (海螺) & Jimeng (即梦): Surprisingly effective for stylized and anime-adjacent aesthetics. These models showed a higher sensitivity to the "flatness" of the illustration style, preserving the artistic stroke better than the models trained heavily on photorealism.

The Verdict: I utilized a hybrid approach, selecting the best output from Jimeng for the final composite.

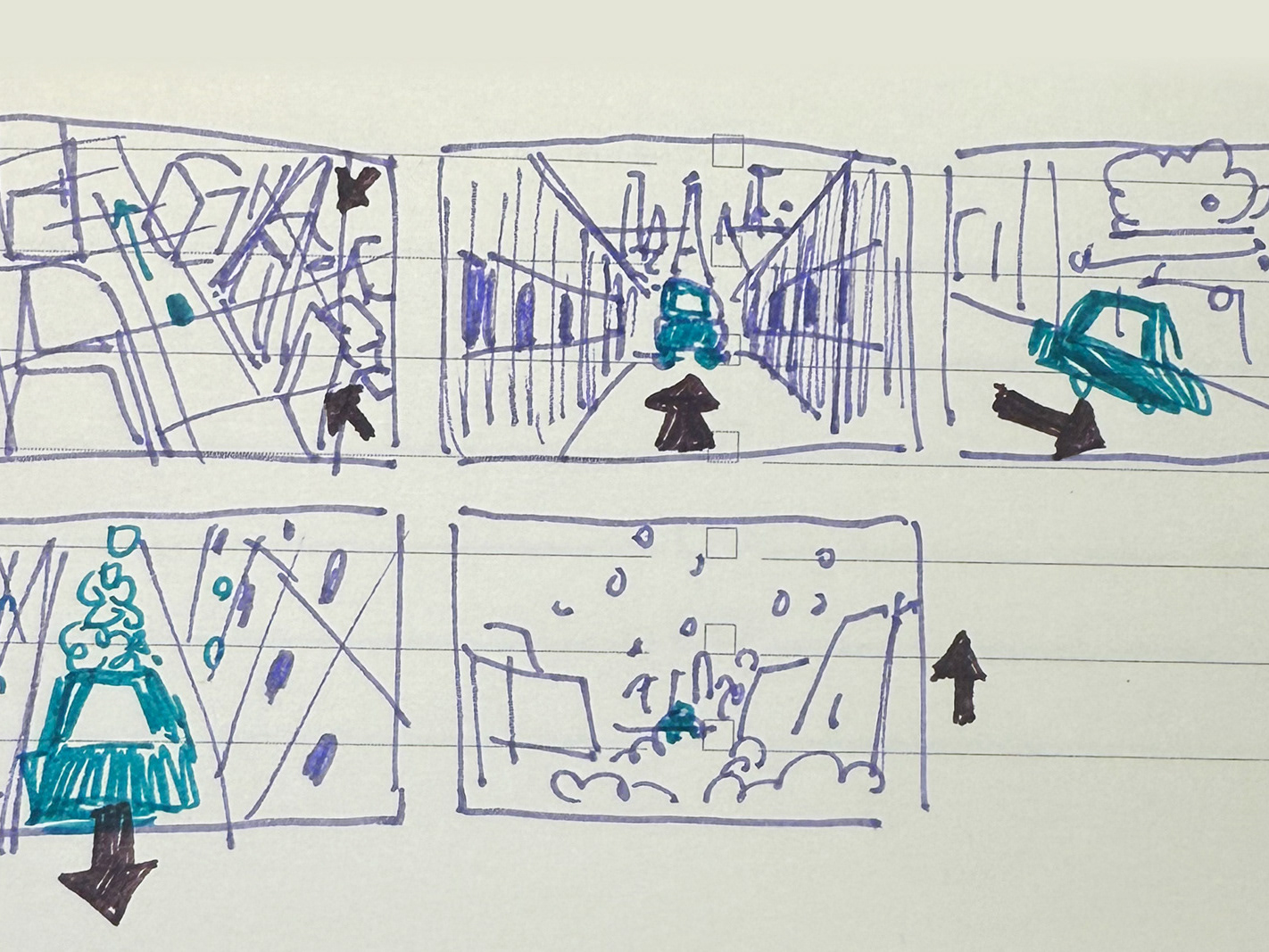

Some failed generations:

Generated via precise prompt engineering for specific motion control:

The Workflow: Reclaiming Control with After Effects

AI generation was only the beginning. The raw outputs from these models are often linear and lack the rhythmic "snap" required for professional motion design. To elevate the generated clips into a cohesive animation, I developed a specific pipeline:

Overshooting the Duration: I intentionally generated longer clips than necessary. This gave me extra handle frames to work with in post-production.

Rhythmic Time Remapping: Raw AI motion tends to "drift." In After Effects, I applied aggressive time-remapping curves to the generated footage. By compressing the transition moments and easing into the hold frames, I created a snappy, deliberate rhythm that mimics hand-animated match cuts.
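As a rough illustration of the idea, the remapping can be thought of as a curve that maps output time to source time: flat near the start and end (the holds) and steep in the middle (the compressed transition). The sketch below is not After Effects code and not the exact curve used in the project; the function names, the `sharpness` parameter, and the frame counts are all hypothetical, chosen only to show the shape of the mapping.

```python
def snap_ease(u: float, sharpness: float = 4.0) -> float:
    """Map normalized output time u in [0, 1] to normalized source time.

    Higher sharpness means longer holds at both ends and a faster
    transition in the middle. (Hypothetical curve, for illustration only.)
    """
    u = min(max(u, 0.0), 1.0)          # clamp to the valid range
    a = u ** sharpness
    b = (1.0 - u) ** sharpness
    return a / (a + b)                 # a + b is never zero on [0, 1]


def remap_frames(source_frames: int, output_frames: int,
                 sharpness: float = 4.0) -> list:
    """Return, for each output frame, the index of the source frame to show."""
    if source_frames < 1 or output_frames < 2:
        raise ValueError("need at least 1 source frame and 2 output frames")
    last = source_frames - 1
    return [
        round(snap_ease(i / (output_frames - 1), sharpness) * last)
        for i in range(output_frames)
    ]


# Assumed numbers: a 121-frame generated clip squeezed into a 25-frame cut.
mapping = remap_frames(source_frames=121, output_frames=25)
print(mapping)
```

With these assumed numbers, the first and last several output frames stay close to the start and end keyframes, while most of the source motion is consumed in a handful of frames around the midpoint. That is the "snap" described above.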

The "End Frame" Problem: Even with Start/End frame inputs, AI rarely lands pixel-perfectly on the target frame. I manually color-graded and composited the final frames to ensure a seamless loop, fixing the subtle color shifts and artifacts introduced by the diffusion process.
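The correction in this project was done by hand, but the underlying idea of pulling a drifted frame back toward its target can be sketched with simple statistics matching. The example below is an assumption-laden stand-in, not the actual grading process: it treats a frame as a flat list of `(r, g, b)` tuples and shifts and scales each channel so that its mean and spread match the target keyframe. The function names and the sample pixel values are hypothetical.

```python
from statistics import mean, pstdev


def match_channel(generated: list, target: list) -> list:
    """Shift and scale one channel so its mean and spread match the target's."""
    g_mean, t_mean = mean(generated), mean(target)
    g_std, t_std = pstdev(generated), pstdev(target)
    gain = t_std / g_std if g_std > 0 else 1.0   # avoid dividing by zero on flat channels
    return [min(255.0, max(0.0, (v - g_mean) * gain + t_mean)) for v in generated]


def match_frame(generated: list, target: list) -> list:
    """Apply the per-channel match to a frame given as a list of (r, g, b) tuples."""
    channels = [
        match_channel([p[c] for p in generated], [p[c] for p in target])
        for c in range(3)
    ]
    return list(zip(*channels))


# Hypothetical data: the generated end frame has drifted warmer
# (+8 red, -5 blue) relative to the original end keyframe.
target_frame = [(10, 20, 30), (50, 60, 70), (90, 100, 110)]
generated_frame = [(18, 20, 25), (58, 60, 65), (98, 100, 105)]

corrected = match_frame(generated_frame, target_frame)
print(corrected)
```

A global match like this only addresses an overall color cast. Local artifacts, such as smeared linework or texture changes, still need manual compositing against the original illustration.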

Final Polish: Sound design was added to drive the cuts, unifying the disparate characters into a single, flowing narrative.